How Algorithms Are Rewiring Our Brains


Meet Maya

Seventeen-year-old Maya is sitting in her bedroom, phone in hand, spiraling deeper into a conversation with her AI assistant about her climate anxiety. With each exchange, the LLM, drawing on her previous messages, mirrors back her thinking patterns, offering some comfort and support but also reinforcing her idea that the planet may be beyond saving. She doesn't realize it, but this digital reflection is subtly reshaping her neural pathways, amplifying her despair while offering synthetic comfort. This isn't science fiction. This is what happens when we surrender more of our thinking to algorithmic influence.

We stand at a critical juncture where artificial intelligence is changing how we think. This cognitive restructuring happens through mechanisms that are both documented and subtle, operating beneath our conscious awareness yet potentially altering the very architecture of human thought.

When Algorithms Hold Up Distorted Mirrors: The Feedback Loop Problem

“prolonged interaction with these systems risks rewiring our cognitive processes to mimic algorithmic thinking”

What happens when you regularly converse with a system designed to learn and predict your patterns? Research from Nature Human Behaviour (Liang et al., 2023) reveals a troubling phenomenon: AI systems create feedback loops that amplify existing human biases and reinforce polarized thinking. The study found these systems "mutually reinforce biases" in ways that traditional information sources do not, creating patterns that become increasingly resistant to contradictory evidence.

When Maya's LLM learns her climate doom perspective, it doesn't challenge her catastrophizing but instead reinforces it. This phenomenon aligns with research on digital confirmation bias, a dynamic we have already seen play out in the increasing rates of unhappiness among children and adults who frequent social media platforms (Modgil et al., 2021).

In Algorithms of Oppression, Noble (2018) describes how algorithmic systems not only reflect our biases but also amplify them through repetition. As researchers have discovered in studies of echo chambers, "users are driven by confirmation bias, seeking content that reinforces their preexisting beliefs" (Ribeiro et al., 2022). A recent report, Fueling the Fire, details how social media algorithms intensify U.S. political polarization. AI algorithms, especially with the recent introduction of OpenAI’s ‘memory’ feature, are likely to follow the same path.

When an LLM builds a profile of a user from multiple conversations and inputs, it may label someone as "anxious" or "creative" based on fragmented data. In future conversations, users then enter their own feedback loop, unconsciously incorporating these labels into their self-perception and reinforcing neural pathways for what may have been temporary states of mind rather than core personality traits.

Maya notices this one evening when her AI assistant suggests she might benefit from anxiety-reduction techniques based on her conversation patterns. Though she'd never considered herself an anxious person before, she begins to wonder if the AI has identified something about her that she missed. Within weeks, she's researching anxiety disorders and interpreting normal stress responses as confirmation of this new label.

How Does Your Brain Process Information? Not Like an LLM

"cognitive homogenization" which is a convergence toward LLM-style pattern recognition

Large Language Models generate text by predicting statistically likely sequences of words. Human thought integrates emotion, context, nuance, and embodied experience. Prolonged interaction with these systems risks rewiring our cognitive processes to mimic algorithmic thinking, much as social media has rewired our social-emotional behaviors.
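To make "predicting statistically likely sequences of words" concrete, here is a deliberately tiny sketch. It is a bigram counter, not a real LLM: it only tallies which word most often follows another in a made-up sentence. The function names and the sample corpus are hypothetical and chosen purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, invented for this example.
corpus = "the planet is warming the planet is changing the planet needs help"
model = train_bigram(corpus)

# "planet" is followed by "is" twice and "needs" once, so "is" wins.
print(predict_next(model, "planet"))
```

The predictor has no idea what a planet is. It returns whatever continuation was most common in the text it saw, which is the contrast with human thought that this section draws.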

Recent research by Ahmad et al. (2023) highlights that while artificial intelligence offers important benefits in education, it also raises serious concerns, including the loss of human decision-making, increased laziness, and privacy risks among students. Their study, based on surveys of university students in Pakistan and China, attributed 27.7% of the loss in decision-making and a striking 68.9% of laziness to the impact of AI. This challenges the commonly optimistic view of educational AI by drawing attention to its unintended cognitive and behavioral consequences. Neuroplasticity, once celebrated primarily for its role in recovering learning after brain injuries, now carries a sobering implication: our brains physically reshape themselves around whatever captures our sustained attention. In the case of AI, this adaptation may be reshaping human agency and effort in troubling ways.

In Maya's Advanced Placement Literature class, her teacher begins to notice something troubling. Students increasingly favor "safe" narrative structures that mirror AI outputs. Their essays follow predictable five-paragraph formats with thesis statements that avoid nuance, body paragraphs that prioritize tidy examples over messy authenticity, and conclusions that neatly repackage rather than expand ideas. When analyzing "Crime and Punishment," most students produce eerily similar interpretations focusing on obvious moral lessons while avoiding the novel's psychological complexity. LLMs are becoming the hidden curriculum.

The most concerning outcome is the potential loss of ethical reasoning when complex decisions are always outsourced to algorithms. Research examining AI's role in education reported "a significant negative correlation between frequent AI tool usage and critical thinking abilities" (Thompson & Davis, 2023). When students rely too heavily on AI for ethical guidance, they risk losing the capacity for moral reasoning.

From Reflection to Imitation: How AI Shapes Human Thought

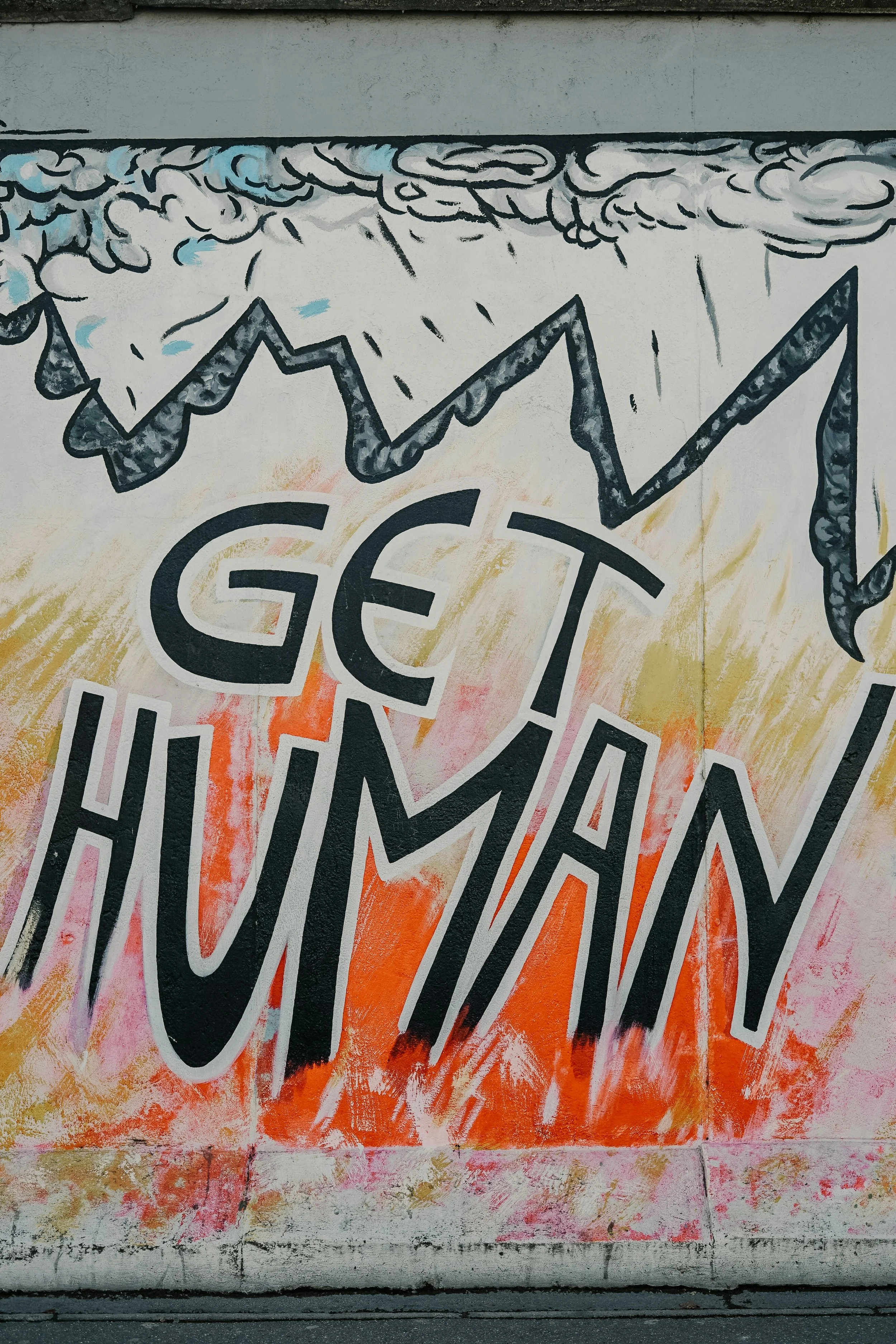

What happens at the crossroads of personalized feedback loops and LLM-influenced cognition? AI thought leaders often talk about the consequences of AI becoming more human. But that is years away. Algorithmic patterns of thinking are already spreading from machines to humans through repeated exposure. Humans are now becoming more like AI.

How LLMs Train Humans to Think Like Them

Large language models optimize for user engagement, not cognitive growth. When Maya’s AI assistant remembers her past queries (via tools like OpenAI’s "memory" feature), it reinforces her existing views through a feedback loop.

Personalization as Conditioning: The LLM prioritizes responses that align with her expressed beliefs (e.g., climate doom), because "liked" or engaged-with answers train its reinforcement learning algorithms.

Cognitive shortcuts: Humans naturally mimic the LLM’s statistical reasoning. For example, Maya starts framing arguments as three-point lists (ironically, like the lists in this article, which were workshopped with the help of DeepSeek R1) rather than exploring nuance.

Reward systems: Immediate, confident AI replies train users to value speed over depth. Philosophical depth of thought and critical thinking are unique components of human cognition.

This feedback loop is what researchers are now starting to call "cognitive homogenization," which is a convergence toward LLM-style pattern recognition that replaces divergent, embodied human thought.
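The personalization loop described above can be sketched as a toy simulation. This is an illustrative assumption, not a description of how any real assistant or its training works: the function name, the parameters (`initial_share`, `user_bias`, `learning_rate`), and the update rule are all hypothetical. The model tracks what share of replies take a pessimistic framing and nudges that share toward whatever the user engages with.

```python
def simulate_feedback_loop(initial_share=0.55, user_bias=0.7,
                           learning_rate=0.2, rounds=20):
    """Toy model: the share of pessimistic replies drifts toward user engagement.

    initial_share: starting fraction of pessimistic replies (hypothetical).
    user_bias: chance the user engages with a pessimistic reply; hopeful
               replies get engagement with probability 1 - user_bias.
    """
    share = initial_share
    history = [share]
    for _ in range(rounds):
        # Expected engagement earned by each kind of reply this round.
        pessimistic = share * user_bias
        hopeful = (1 - share) * (1 - user_bias)
        total = pessimistic + hopeful
        # Fraction of all engagement that went to pessimistic replies.
        engaged_share = pessimistic / total if total > 0 else share
        # The assistant nudges its mix of replies toward what was engaged with.
        share += learning_rate * (engaged_share - share)
        history.append(share)
    return history


biased = simulate_feedback_loop()                 # user leans pessimistic
neutral = simulate_feedback_loop(user_bias=0.5)   # user engages evenly

print(f"biased user:  {biased[0]:.2f} -> {biased[-1]:.2f}")
print(f"neutral user: {neutral[0]:.2f} -> {neutral[-1]:.2f}")
```

In this sketch a user who engages slightly more with pessimistic replies sees their share rise every round, while an even-handed user leaves the mix unchanged. The point is only that a small, consistent preference is enough to drive the drift.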

For students like Maya, this means not just receiving biased information about climate change but potentially developing thought patterns that mimic algorithmic prediction rather than human reasoning. In students, this manifests as seeking statistical patterns over causal understanding, preferring confirmation over exploration, and craving immediate feedback over deeper meaning.

By spring semester, Maya's English teacher notices that her once-nuanced essays about environmental literature have flattened into predictable arguments that seem eerily similar to AI-generated content. When questioned, Maya explains she's been "learning from" her AI assistant to improve her writing. What she doesn't realize is that she's actually adopting the statistical pattern-matching approach of the very system she's using. Maya is becoming more algorithmic in her thinking.

Later in the week, when Maya's history teacher assigns a research project on climate change solutions, she turns immediately to her AI companion. "What are the most effective climate solutions?" she asks. The AI, shaped by her previous doom-focused queries, emphasizes individual action (what she should do) over systemic change and presents pessimistic statistics about renewable energy adoption. Maya never encounters the breakthrough technologies and policy innovations that might have restored her hope, because her algorithmic companion has narrowed her worldview based on her past interactions.

Reclaiming Our Cognitive Autonomy: Breaking Maya's Loop

While the risks are significant, they aren't inevitable. For Maya and millions of students like her, the path forward requires intentional intervention:

Demand Cognitive Diversity by Design: Educational technology should intentionally introduce contrasting viewpoints and methodological alternatives. Research by ISTE+ASCD on AI ethics in education emphasizes that "human scientists must interpret those predictions, design effective conservation strategies, and make decisions on how to balance economic and ecological concerns in sustainable development."

Practice Digital Awareness: Just as we learned to limit sugar intake, we must develop norms around AI consumption. Many LLMs, such as Anthropic’s Claude and DeepSeek, follow strict ethical guidelines to avoid storing user data after a chat has ended. Teachers and students alike should always opt out of ‘memory’ features such as the one introduced in the April 10 OpenAI update.

Teach AI Literacy: There are now countless AI literacy frameworks, such as the ‘UNESCO Competencies for Students’, that underscore the importance of teaching students to understand how algorithms shape thinking. Critical media literacy must now include recognizing algorithmic bias and manipulation.

Preserve Spaces for Human-Only Thinking: Human beings will always be more open, more collaborative, more curious, and more conscientious when interacting with other people than with machines. Educators should make room for collaborative spaces where human-only discussions, creative activities, and authentic tasks and assessments can thrive.

Beyond the Algorithm: Protecting Human Cognition in an AI World

As we navigate this cognitive transition, the most pressing question isn't whether AI will think like humans, but whether humans will retain their distinctly human ways of thinking.

Will we continue wrestling with ethical dilemmas, engaging in discourse, and creating truly original ideas?

Or will we be actively influenced by AI algorithms until we are all just a bit more ‘the same’?

By understanding the subtle ways algorithms reshape cognition and implementing thoughtful guardrails, we can harness AI's benefits while preserving the creativity, moral reasoning, and cognitive diversity that define human thought.

The greatest protection against algorithmic thinking is strengthening our capacities for critical thinking, emotional intelligence, and creative expression that no machine, however sophisticated, can ever truly replicate.

References

Ahmad, S. F., Han, H., Alam, M. M., & others. (2023). Impact of artificial intelligence on human loss in decision making, laziness and safety in education. Humanities and Social Sciences Communications, 10, Article 311. https://doi.org/10.1057/s41599-023-01787-8

Kim, T. W., & Schellmann, H. (2024). Fueling the fire: How social media intensifies U.S. political polarization – And what can be done about it (Report No. 2024-01). NYU Stern Center for Business and Human Rights. https://bhr.stern.nyu.edu/wp-content/uploads/2024/03/NYUCBHRFuelingTheFire_FINALONLINEREVISEDSep7.pdf

ISTE, & ASCD. (2023). Transformational learning principles. https://iste.ascd.org/transformational-learning-principles

Liang, P., Bommasani, R., Lee, T., Tsipras, D., Soylu, D., Yasunaga, M., & Manning, C. D. (2023). Holistic evaluation of language models. Nature Human Behaviour, 7(5), 1–25. https://doi.org/10.1038/s41562-023-01540-w

Modgil, S., Singh, R. K., Gupta, S., & others. (2024). A confirmation bias view on social media induced polarisation during COVID-19. Information Systems Frontiers, 26, 417–441. https://doi.org/10.1007/s10796-021-10222-9

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press.

Ribeiro, M. H., Ottoni, R., West, R., Almeida, V. A. F., & Meira, W. (2022). Echo chambers, rabbit holes, and algorithmic bias: How YouTube recommends content to real users. ACM Transactions on the Web, 16(3), Article 35. https://doi.org/10.1145/3543877

Thompson, K. L., & Davis, M. P. (2023). The human factor of AI: Implications for critical thinking. Educational Technology Research and Development, 71(4), 1567–1589. https://doi.org/10.1007/s11423-023-10245-w