Don’t Wait: Why Higher Education Needs AI Ethics and Readiness Training Now

Many organizations have begun shifting toward hybrid teams of humans and AI agents, and the pace is accelerating. Eighty-one percent of leaders expect moderate to extensive integration of agents into their AI strategy within the next 12–18 months. Nearly a quarter report that AI is already embedded across their organization, while another 12% are actively piloting it.

AI and big data are expected to be the fastest-growing skills through 2030, followed by networks and cybersecurity, and then by technological literacy. A majority of leaders say they would hire a candidate with less experience but strong AI skills over one with more experience but no AI skills.

Whether a learner is entering healthcare, education, energy, manufacturing, government, or business, AI will likely reshape that field. All students in higher and continuing education programs must be equipped with the competencies to use AI responsibly and effectively.

AI skills are already decisive in workplace readiness, hiring, and advancement, yet curricula often lag behind. Are we leaving graduates underprepared?

Some institutions are racing to add prompt engineering workshops, tool tutorials, and courses on practical AI applications in response to industry demand for graduates who can use AI and contribute meaningfully to their organizations. However, this rapid expansion has created a significant gap: many programs teach students how to operate AI tools long before they teach them how to evaluate those tools or the outputs they generate. Compounding this issue, opportunities for practical AI training vary widely across institutions, leaving learners with uneven exposure and preparedness.

Amid ongoing debates about whether, and to what extent, students should use AI in their coursework, targeted instruction on identifying biased outputs, spotting inaccuracies, and engaging in ethical practices remains largely missing. As a result, many graduates leave programs either entirely self-taught or with only surface-level, tool-based exposure. They may be able to generate outputs with AI, but they are far less prepared to question, critique, or challenge those outputs when they are inaccurate, harmful, or misleading.

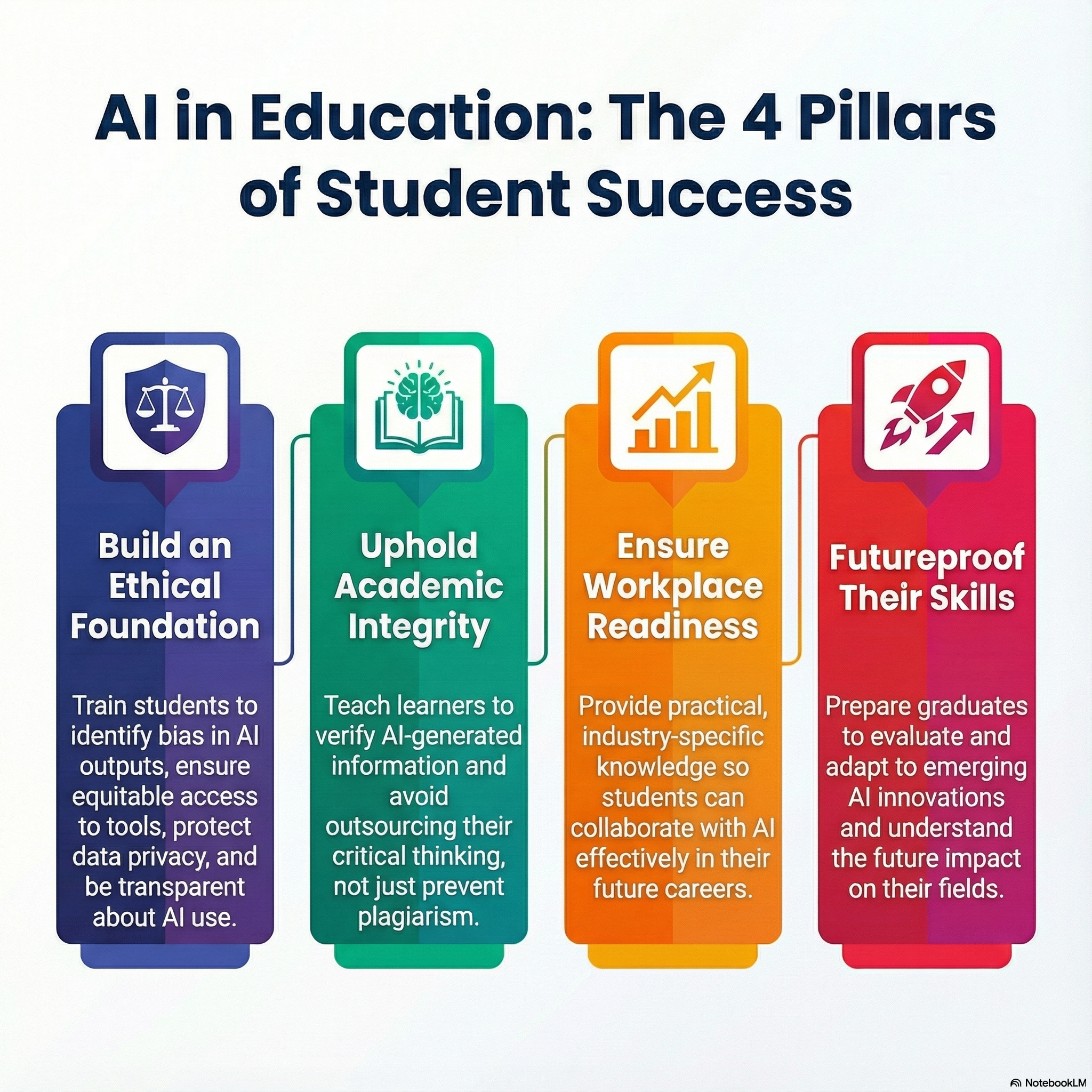

4 Key Areas Every Program Must Address

1. Ethics

Ethics must be foundational in AI education. Because AI systems are trained on human-created data, their outputs often mirror human biases. Users need explicit training in how to identify, question, and counteract biased or harmful outputs. For example, when minority groups are underrepresented in health datasets, AI models trained on that data can produce skewed predictions that lead to unequal treatment. Identifying and correcting bias in AI outputs requires both iterative prompting strategies and human-led revision to ensure accuracy, fairness, and contextual appropriateness.

Equity is also an ethical concern. Access to AI tools varies widely across socioeconomic backgrounds, and basic access to technology is not guaranteed for all learners. If AI enhances learning or fieldwork, programs must ensure equal access and examine the systemic inequities that shape who is able to benefit. Nearly one-third of the world’s population remains underserved in terms of internet connectivity, particularly in rural and remote regions, which shows how the digital divide continues to limit who can learn with AI, or even study it, in the first place. Higher and continuing education must therefore not only embed ethical reflection on access, but also invest in the infrastructure, resources, and supports that ensure AI-enhanced instruction does not widen existing inequalities.

Privacy and data security are not only technical considerations but also ethical ones. Students should be taught how to protect their own and others’ information when interacting with AI tools and to recognize the ethical implications of mishandling sensitive data. For example, when users enter information into a free ChatGPT account, OpenAI may use that content, including prompts, responses, and file uploads, to train and improve its models unless the user opts out; content submitted through ChatGPT Enterprise or the API is not used for training. Similarly, Google Gemini typically stores and uses conversation data to improve its systems unless the user opts out or is operating under a Google Workspace account. Even when a user opts out, Gemini chats may be retained for up to 72 hours for safety and security processing. Security breaches, data scraping, and model vulnerabilities remain real risks. Learners must understand ethical practices such as removing identifying information, safeguarding others’ privacy, and critically questioning how and why their data may be used.

Finally, transparency should be standard practice, and it is an ethical obligation. When learners do use AI, whether for brainstorming, revising, or generating drafts, they should clearly state when and how it was used so that instructors and employers can understand the origin of the work and the reasoning behind it. Because access to AI tools varies across higher and continuing education settings, transparency also helps institutions identify where additional guidance, resources, or support may be needed. Ethical transparency reinforces accountability, respects the integrity of the learning process, and enables schools and organizations to accurately assess a learner’s or employee’s thinking, skills, and growth. AI can support learning, but users must still verify its outputs and remain accountable for any errors, hallucinations, or misrepresentations they contain.

2. Integrity

Integrity now extends beyond avoiding plagiarism. It involves using AI responsibly and recognizing when others are using it inappropriately. For example, a lawyer who relied on ChatGPT to draft a legal motion submitted citations to cases that did not exist. When the error came to light, he was fired and faced disciplinary action. Incidents like this show how critical it is for professionals to verify AI outputs and uphold ethical standards, protecting not only themselves but also the clients and communities they serve.

Students must learn not to outsource their critical thinking; in fact, critical thinking is essential for effective AI use. Caulfield and Wineburg’s (2023) SIFT model (Stop, Investigate the source, Find better coverage, and Trace claims to their original context) offers a useful framework for evaluating information. AI users should apply these practices routinely because they strengthen research skills and protect learners from believing or sharing misinformation and from unintentionally plagiarizing.

Ultimately, integrity in the age of AI requires intellectual curiosity, discipline, and a commitment to deep understanding, not simply efficient output.

3. Readiness

Together with ethics and integrity, learners need practical knowledge of how AI is used in their industries. A baseline familiarity with major AI systems relevant to their field can help students stand out among peers and even among professionals who have not yet received AI-focused training. Programs should prepare learners to collaborate with AI, not fear being replaced by it, by highlighting how AI is reshaping roles, workflows, and expectations and by helping students understand both the strengths and limitations of the tools they will encounter in the workplace. When students develop practical, discipline-specific AI skills and have opportunities to apply them, they are better prepared to adapt to emerging technologies and drive innovation within their fields.

4. Futureproofing

In addition to developing practical skills, programs must also prepare learners for emerging innovations. Students must engage in forward-looking conversations about AI’s evolving impact. What tools are emerging? How might roles and responsibilities change? What ethical concerns will shape future policy?

Programs cannot limit instruction to today’s tools; they must also prepare learners for the next wave of innovation. Graduates will shape the future of work, and their choices, behaviors, and competencies will influence how society navigates AI’s opportunities and challenges. Preparing students to evaluate new AI capabilities as they emerge ensures they can identify potential issues early, adapt responsibly, and guide their organizations through technological change.


What Now?

AI is advancing quickly, and education must keep pace. Every program in higher and continuing education should integrate AI training, either through a dedicated course in the discipline or through embedded modules within existing classes (for example, AI & Ethics in Nursing or AI in Construction Management).

Now is the time for departments across higher and continuing education to take stock of both their own research and the growing body of scholarship on AI. Faculty and academic leaders must engage directly with industry professionals to understand the realities of the modern workplace, identify emerging skill demands, and refine their own expertise through ongoing AI upskilling. As AI continues to evolve, all of us (educators, researchers, and practitioners) must build on our existing knowledge to adapt to new expectations and responsibilities.

For institutions, this means developing structures that support continuous learning, interdisciplinary collaboration, and responsible innovation. It means empowering instructors with professional development on AI, embedding ethical frameworks across curricula, and building feedback loops with employers to ensure programs remain relevant and rigorous.

As these conversations and efforts take shape, learners and educators must also play an active role in advocating for what they need. Students, professionals, and instructors should be asking their institutions: Where is the AI ethics and integrity module for our program?

Preparing learners now ensures they will enter the workforce ready not only to use AI, but to shape its future with ethics, integrity, readiness, and foresight.

About the Author

Dr. Athena Stanley helps schools and universities integrate AI in ways that preserve human judgment. As founder of Athena Global Learning, she works with institutions on AI literacy and curriculum development. Her approach draws on 16 years of teaching across K–12 and higher education in Ecuador, Turkey, China, and the U.S. This experience has shaped her conviction that ethical AI integration requires understanding how learning actually happens in classrooms.

References

Adams, L., & Eckard, D. (2024, August 12). The digital divide persists. Now is the time to close it. Forbes. https://www.forbes.com/sites/nokia-industry-40/2024/08/12/the-digital-divide-persists-now-is-the-time-to-close-it/

Caulfield, M., & Wineburg, S. (2023). Verified: How to think straight, get duped less, and make better decisions about what to believe online. The University of Chicago Press.

Intel. (n.d.). Artificial intelligence (AI) use cases and applications. https://www.intel.com/content/www/us/en/learn/ai-use-cases.html

Jindal, A. (2022). Misguided artificial intelligence: How racial bias is built into clinical models. Brown Hospital Medicine, 2(1), 38021. https://doi.org/10.56305/001c.38021

Microsoft. (2025, April 23). 2025 work trend index annual report: The year the frontier firm is born. https://www.microsoft.com/en-us/worklab/work-trend-index/2025-the-year-the-frontier-firm-is-born

Microsoft & LinkedIn. (2024, May 8). 2024 work trend index annual report: AI at work is here. Now comes the hard part. https://assets-c4akfrf5b4d3f4b7.z01.azurefd.net/assets/2024/05/2024_Work_Trend_Index_Annual_Report_6_7_24_666b2e2fafceb.pdf

Mollman, S. (2023, November 17). A lawyer fired after citing ChatGPT-generated fake cases is sticking with AI tools: ‘There’s no point in being a naysayer’. Fortune. https://fortune.com/2023/11/17/lawyer-fired-after-chatgpt-use-is-sticking-with-ai-tools/

World Economic Forum. (2025). Future of jobs report 2025: Insight report January 2025. https://reports.weforum.org/docs/WEF_Future_of_Jobs_Report_2025.pdf