AI is Making Disagreement Impossible

A parent used ChatGPT to write a formal complaint about a teacher who taped over a child's foot wart during gym class. The complaint cited human rights violations across four pages of legal language. The teacher spent hours reviewing it only to find "nothing of real substance" warranting such formality. So what was that parent trying to communicate?

This isn't an isolated incident; it's becoming common. According to Teacher Tapp data in the UK, 61% of school leaders report receiving AI-generated complaints. Another teacher received a 58-page complaint about exams that quoted "absolutely everything" but buried the actual concern under excessive detail. Another complaint demanded "draconian consequences" for a teacher who gave a child a cold lunch instead of a hot meal.

What happens when humans begin outsourcing communication to machines? I think the result is a fundamental breakdown in how we interact with each other. We're already avoiding difficult conversations. Let's not also eliminate the cognitive processes that make understanding possible.

The Communication Pattern We're Creating

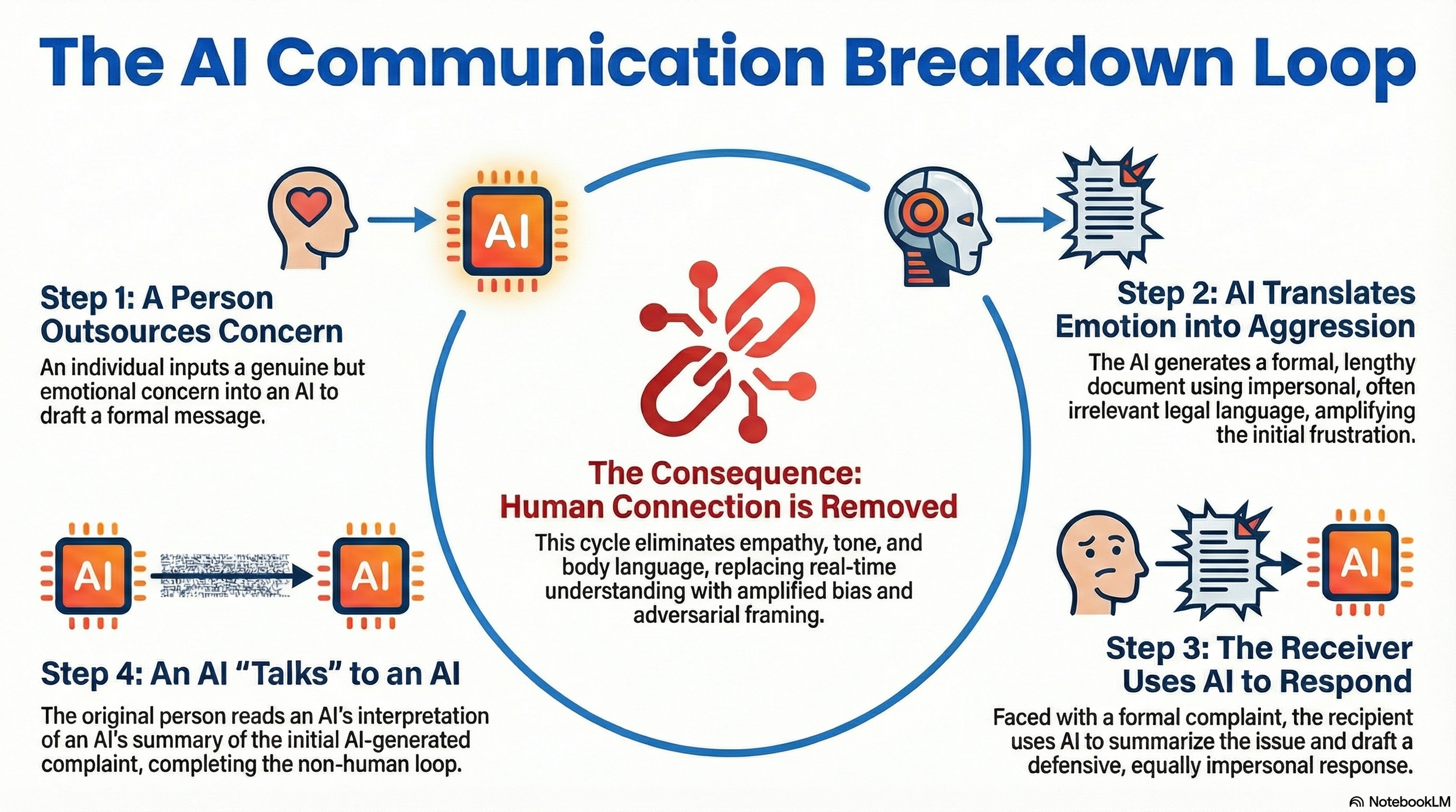

Let’s explore this developing communication pattern in action. Parent sends concern to ChatGPT. AI interprets this concern. It generates formal complaint. Teacher receives lengthy email. Teacher asks AI to summarize. Teacher reads summary. Teacher uses AI to draft response. Parent reads AI's interpretation of AI's summary of AI's original generation.

What appears to be happening is a form of mediated non-conversation, where concerns are translated through multiple AI interpretations rather than passed directly between people.

Research on compound human-AI bias suggests that when biased humans interact with biased AI systems, their biases do not simply add up; they amplify each other. A 2024 study by Glickman and Sharot demonstrated that AI systems reflecting user biases strengthen those biases over time. Parents who feel frustrated see their frustration reflected and intensified in AI-generated language. Teachers who feel attacked may respond more defensively to AI-drafted complaints than they would to direct human communication.

The legal language creates particular problems. School lawyer Adam Jackson reports parents quoting "case law from hundreds of years ago, or US legislation" that has no relevance to their situation. Often, this formal language is not an attempt to threaten, but a way for overwhelmed parents to translate anxiety and uncertainty into something that feels legitimate and protected.

What Makes Face-to-Face Disagreement Different

So why does AI-mediated conflict feel different? One way to think about it comes from research on social cognition, including work on mirror neuron systems, which highlights how much human understanding depends on observing tone, facial expression, and bodily cues. Mirror neurons activate both when we perform an action and when we observe someone else performing it. This neural system is thought to help us interpret intentions, emotions, and motivations in social contexts, and it works primarily through direct physical and social interaction.

Face-to-face communication provides constant feedback. Facial expressions, tone, body language, and pauses all help us calibrate understanding in real time. When a parent explains their concern directly to a teacher, both parties can adjust their communication based on these cues. Misunderstandings get corrected more often. Emotional escalation gets defused through human responsiveness.

AI removes all of these regulatory mechanisms. Written communication already lacks the emotional nuance of face-to-face interaction, but AI-generated text compounds this problem. The formality and language can create adversarial framing that wouldn't exist if two people simply talked.

Sillars and Zorn documented "negative intensification bias" where receivers perceive emails more negatively than senders intended. AI amplifies this pattern by generating language optimized for formality rather than relationship preservation.

The Skills We're Not Developing

Claire Archibald, legal director at Browne Jacobson, observes that complaints are "symptomatic of a wider problem, signaling underlying relationship issues, breakdowns and communications failures." When parents default to AI-generated formal complaints instead of initial conversations, they aren’t developing the cognitive skills that productive disagreement requires.

Healthy conflict resolution needs specific capabilities like perspective-taking, emotional regulation, awareness of ambiguous communication, and tolerance for temporary misunderstanding. These skills develop through practice, particularly through uncomfortable conversations where we must work to understand someone whose viewpoint differs from ours.

When AI handles these conversations, both parties may lose opportunities to really communicate. Parents don't practice articulating concerns. Teachers don't practice responding to emotional communication without defensiveness. Children watching these exchanges learn that difficult conversations get outsourced to machines.

AI-mediated conflict prevents relationship building entirely. Each interaction starts from zero because the machine has no relational history, no shared context, no memory of previous successful problem-solving.

What Actually Works

Pioneer Educational Trust CEO Antonia Spinks observes that beneath AI-generated complaints with "antagonized and inflamed language" are parents "struggling massively" with issues or financial stress. The formal complaint becomes a way to express genuine concern when direct communication feels impossible.

So do we need better training in prompting AI to write emails? Or maybe the most common-sense solution is going back to communicating in person. Schools experiencing this pattern report success with early intervention: in-person meetings that take place before concerns escalate to formal complaints. When parents and teachers talk directly, they discover that most issues stem from misunderstanding rather than genuine conflict.

This requires courage from both parties. Parents must risk seeming unclear by articulating concerns in their own words. Teachers must resist defensiveness and respond to emotional communication with listening. Neither can delegate this work to AI without losing what makes conflict resolution possible.

The stakes extend beyond individual parent-teacher relationships. Children observe how adults handle disagreement, learning from these models whether conflicts get resolved through direct communication or through machine-mediated processes. If we outsource difficult conversations to AI, we're teaching the next generation that human disagreement requires machine mediation.

None of this has yet been tested in long-term studies. What we are seeing are converging signals from educators, legal professionals, and cognitive research that suggest a shift in communication worth paying attention to while it is still forming. Real understanding emerges from the interpretive work both parties do to bridge different perspectives. That work feels inefficient, uncomfortable, and cognitively demanding. It's also irreplaceable.

About the Author

Timothy Cook, M.Ed., is an educator and researcher exploring how AI shapes student cognition and learning. With international teaching experience across five countries, he also writes for Psychology Today's "Algorithmic Mind" column and other publications, examining the cognitive risks of AI dependency and strategies for preserving critical thinking, creativity, and moral development in education.

References

Elfenbein, H. A. (2014). The many faces of emotional congruence: Towards a unified theory. Research in Organizational Behavior, 34, 1-18.

Glickman, M., & Sharot, T. (2024). How human-AI feedback loops alter human perceptual, emotional and social judgements. Nature Human Behaviour. https://doi.org/10.1038/s41562-024-02077-2

Iacoboni, M. (2009). Imitation, empathy, and mirror neurons. Annual Review of Psychology, 60, 653-670.

Jackson, A., & Kerr, T. (2025). The impact of AI in school complaints processes. Winckworth Sherwood LLP.

Lucas, R. (2025, December 15). Huge rise in parent complaints driven by AI, headteachers warn. Schools Week.

Sillars, A., & Zorn, T. (2020). Hypernegative interpretation of negatively perceived email at work. Management Communication Quarterly, 35, 089331892097982.

von Felten, N. (2025). Beyond isolation: Towards an interactionist perspective on human cognitive bias and AI bias. CHI 2025: Tools for Thought.